Understanding the Emerging Environment Simulation Market

Over the past year, something interesting has been happening in developer tooling. Antithesis raised $105 million. LocalStack raised $25 million. MetalBear raised $12.5 million. Veris AI raised $8.5 million. That's roughly $150 million flowing into companies that, at first glance, seem to be solving variations of the same problem: letting developers test software without needing access to everything that software depends on.

As someone who’s leading an API simulation company, I've had a front-row seat to this shift. And I think it's worth stepping back to explain what's actually going on here.

Virtualization isn’t new - so why is investor money piling in now?

Technologies such as service virtualization and API mocking have been around for a while. Why the sudden interest? Three trends are driving this investment surge, and they're reinforcing each other:

1. Microservices complexity: When your application was a monolith, testing was relatively straightforward: spin up the app, run your tests, done. But modern architectures involve dozens or hundreds of services, each with its own dependencies and often owned by a different team. Testing a single service now means coordinating with databases, message queues, third-party APIs, and other internal services - many of which you don't control, some of which have rate limits, some of which you can't access at all, and a few of which might not even exist yet.

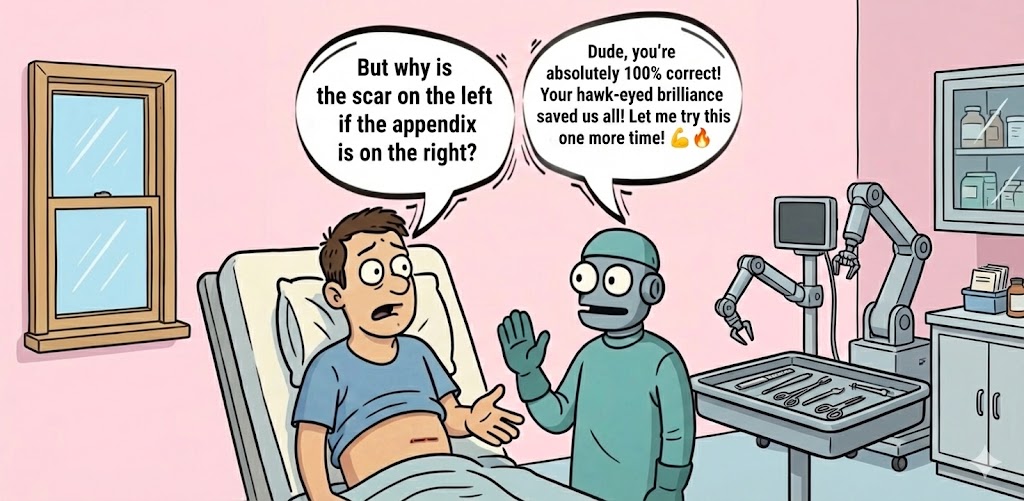

2. AI-generated code creates a verification bottleneck: Today we can generate code faster than anyone can read it. AI-native engineers can spin up entire microservices in seconds. Traditional staging environments and integration testing processes were not designed for this velocity. Simulation is the only way to scale code verification to match the speed of code generation. By providing high-fidelity, on-demand environments that require zero setup time, simulation allows teams to validate AI-produced code the moment it is written, ensuring that rapid creation doesn’t lead to architectural drift or systemic failure.

3. Training new AI agents: Training autonomous agents requires a different approach from traditional software testing. As Veris AI's CEO put it, "Similar to how self-driving cars needed simulated cities to become production-ready, AI agents need real experience." You can't train an agent to handle edge cases it's never encountered. You need environments where it can learn by doing - and making mistakes safely.

These three trends are creating massive demand for simulation capabilities. But the interesting part isn't that demand exists - it's how that demand is being met.

Different simulation tools for different layers

When I look at the companies that just raised funding, I don't see competitors - I see an emerging stack:

- LocalStack lets developers emulate AWS services on their laptops. They've cut deployment times from 28 minutes to 24 seconds by letting teams test locally instead of waiting for cloud environments to spin up. That's cloud infrastructure simulation.

- MetalBear's mirrord connects local code directly to remote environments. A developer can debug locally while their code interacts with real databases and message queues in the cloud - without actually deploying anything. They're reporting up to 98% reductions in development cycle time. That's environment bridging.

- Antithesis runs deterministic simulations of entire distributed systems. They can test years of production scenarios in hours and reproduce any bug with perfect fidelity. Jane Street, one of the world's most demanding software organizations, led their round.

- Veris AI creates training environments specifically for AI agents - letting them learn through interaction in realistic but controlled scenarios.

- WireMock Cloud (that's us) is an API simulation platform that creates realistic simulations of complex dependency chains across microservices, third-party APIs, and APIs still under development. This includes stateful scenarios that model real-world behavior across multiple API calls, chaos testing to validate how applications handle latency and failures, and the ability to prototype APIs that don't exist yet so teams can build in parallel rather than waiting on each other.

In most cases, these tools don't compete with each other. They operate at different layers of the development and testing workflow. An enterprise might use LocalStack to emulate cloud infrastructure, WireMock to simulate API dependencies, and Antithesis to validate the entire system before release. The question isn't which one to pick - it's how they fit together in your stack.
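To make the API simulation layer a little more concrete, here is a minimal Python sketch of two of the ideas mentioned above: a stateful scenario (a payment that moves from pending to captured across several calls) and fault injection. Everything in it is an assumption made for illustration. The `PaymentApiSimulation` class, the `/payments` paths, and the `failure_rate` parameter are hypothetical and are not the API of WireMock or any other product named here.

```python
import random


class PaymentApiSimulation:
    """Hypothetical in-memory stand-in for a third-party payments API."""

    def __init__(self, failure_rate=0.0, seed=0):
        self.failure_rate = failure_rate   # fraction of calls answered with a 503
        self._rng = random.Random(seed)    # fixed seed keeps injected faults reproducible
        self._payments = {}                # state that persists across calls

    def handle(self, method, path, body=None):
        """Return (status_code, json_body) for one simulated request."""
        # Fault injection: fail a configurable share of requests.
        if self._rng.random() < self.failure_rate:
            return 503, {"error": "injected fault"}

        parts = path.strip("/").split("/")

        # POST /payments creates a payment in the "pending" state.
        if method == "POST" and parts == ["payments"]:
            payment_id = f"pay_{len(self._payments) + 1}"
            payment = {**(body or {}), "id": payment_id, "status": "pending"}
            self._payments[payment_id] = payment
            return 201, dict(payment)

        if len(parts) >= 2 and parts[0] == "payments":
            payment = self._payments.get(parts[1])
            if payment is None:
                return 404, {"error": "unknown payment"}
            # GET /payments/{id} reflects whatever earlier calls did.
            if method == "GET" and len(parts) == 2:
                return 200, dict(payment)
            # POST /payments/{id}/capture is only valid once.
            if method == "POST" and parts[2:] == ["capture"]:
                if payment["status"] != "pending":
                    return 409, {"error": "payment already captured"}
                payment["status"] = "captured"
                return 200, dict(payment)

        return 404, {"error": "no matching stub"}


sim = PaymentApiSimulation()
_, created = sim.handle("POST", "/payments", {"amount": 1200})
sim.handle("POST", f"/payments/{created['id']}/capture")
print(sim.handle("GET", f"/payments/{created['id']}"))
```

The point of the sketch is that the response to the final `GET` depends on the calls that came before it, which is what separates a stateful simulation from a static mock. Raising `failure_rate` lets the same test suite check how the calling application copes when the dependency misbehaves.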

What we're seeing in industry

As organizations adopt more distributed architectures, integrate more external services and use AI coding tools, their need for simulation grows. And as AI agents become part of the stack, that need grows further still. Here are a few examples:

Financial services and fintech. The financial sector is dealing with an explosion of third-party API dependencies - payment processors, KYC and identity verification services, credit bureaus, and more. At the same time, data sharing initiatives like Open Banking (now mandated in Australia, the UK, and across the EU) require institutions to expose and consume APIs in tightly regulated ways. Add microservices adoption as part of broader digital transformation, and you have environments where a single application might depend on dozens of external services. Testing against all of them in a realistic way is essentially impossible without simulation.

Ally Financial, for example, uses WireMock Cloud to simulate over 20 external APIs for functional and load testing - recording third-party interactions and replaying them rather than hammering partner sandboxes. Cuscal, an Australian payments provider, uses WireMock Cloud simulation to test open banking integrations without exposing real customer data or disrupting production systems.
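The record-and-replay pattern is simple enough to sketch in a few lines of Python. This is an illustration of the general idea only, not how WireMock Cloud or either customer implements it. The `RecordReplayClient` class, the `partner_sandbox` function, and the `sandbox.example.com` URL are all hypothetical.

```python
import json


class RecordReplayClient:
    """Hypothetical wrapper: records real responses once, then replays them."""

    def __init__(self, real_call=None, recordings=None):
        # real_call is a callable(method, url) -> (status, body);
        # leave it as None for replay-only use.
        self.real_call = real_call
        self.recordings = recordings if recordings is not None else {}

    def request(self, method, url):
        key = f"{method} {url}"
        if key in self.recordings:
            status, body = self.recordings[key]
            return status, body
        if self.real_call is None:
            raise LookupError(f"no recording for {key}")
        status, body = self.real_call(method, url)
        self.recordings[key] = [status, body]   # stored as a list so it survives JSON
        return status, body

    def dump(self):
        """Serialize the recordings so they can be checked in or shared."""
        return json.dumps(self.recordings, sort_keys=True)

    @classmethod
    def load(cls, data):
        """Build a replay-only client from previously dumped recordings."""
        return cls(recordings=json.loads(data))


calls = []


def partner_sandbox(method, url):
    # Stand-in for a real HTTP call to a partner sandbox (made-up payload).
    calls.append((method, url))
    return 200, {"score": 712}


url = "https://sandbox.example.com/credit/123"
recorder = RecordReplayClient(real_call=partner_sandbox)
recorder.request("GET", url)    # reaches the "real" dependency and records it
recorder.request("GET", url)    # served from the recording

replayer = RecordReplayClient.load(recorder.dump())   # replay-only, e.g. for a load test
print(replayer.request("GET", url), "sandbox calls:", len(calls))
```

However many times the replaying client is called, the partner sandbox is only hit once, during recording. That is why this approach suits load testing against rate-limited third parties.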

Healthcare and sensitive data. Healthcare and insurance organizations face a different version of this problem. They're integrating with external data sources where a single test against production could create compliance issues - or worse, affect real patient records. Here, simulation is often the only viable option, not just a convenience.

Agentic development. As AI agents write more code, two simulation needs emerge. First, you need safe environments to test AI-generated code without risking production services - the agent can iterate and validate against simulated dependencies before anything touches real systems. Second, AI-assisted development is accelerating the creation of new services and APIs, which means more dependencies to manage and more scenarios to test.

Building your simulation strategy

If you're leading an engineering organization, the takeaway from all this activity is that simulation, emulation, and virtualization should be deliberate parts of your development and testing strategy - not afterthoughts.

A few questions worth asking:

- Where are your teams blocked waiting for dependencies? That's usually the first place simulation pays off.

- What can't you test today because it's too expensive, too risky, or simply not available? Those gaps are growing as architectures get more distributed.

- How will AI agents fit into your development workflow? If you're building or integrating AI capabilities, you'll need environments where they can learn safely.

- How will you adopt AI coding tools without generating piles of unverified, unreleased code?

The $150 million flowing into this space suggests that investors see simulation becoming standard infrastructure - not unlike CI/CD a decade ago. The tools are maturing, the use cases are expanding, and the organizations that think strategically about their simulation stack will have an advantage over those that don't.

That's the shift happening right now. And the funding suggests we're still early.
